Natural Remedies Unveiled: Essential Oils for Wart-Free Skin

Warts, those small, often unsightly growths on the skin caused by the human papillomavirus (HPV), can be a source of ...

SpaceX’s Vision for Tomorrow: Goals and Milestones for the Next Decade

SpaceX, the pioneering aerospace company led by Elon Musk, has captured the world’s imagination with its ambitious SpaceX Goals and ...

Rena Monrovia: When You Transport Something by Car

Rena Monrovia specializes in optimizing space and ensuring the safety of belongings during car transport. Using cars for goods delivery ...

Pixel 3XL Iron Man Backgrounds

If you’re a fan of Iron Man and own a Pixel 3XL, you can enhance your device with dynamic wallpapers ...

Cocokick Shoes France Trendsetting Designs

Cocokick Shoes France has been making waves for a few reasons. Their focus on using real leather and high-grade textiles ...

Worst Behavior “Wrstbhvr” Clothing

“Worst Behavior Clothing” encompasses various streetwear brands like Good Behavior Brand, Bad Behaviour, and Fiendish Behavior, each offering unique apparel. ...

Vicinity Clothing Deutsch’s Fashion Magic

Vicinity Clothing is a German streetwear brand offering high-quality, ready-to-wear garments at fair prices. The brand caters to diverse individuals ...

Buy Spider Hoodie 555 Online

The Spider Hoodie 555 is a clothing line associated with Young Thug, also known as Jeffery Lamar Williams. This brand ...

Synaworld Clothing Brand UK

Synaworld is a popular clothing brand launched by UK rapper Central Cee. Known for its unique streetwear, the brand offers ...

Parur Clothing France Fashion Legacy

Parur Clothing is a prominent brand that hails from the fashion-forward landscape of France. Famous for its chic and sophisticated ...

Monterrain Clothing Brand UK

Monterrain Clothing is a new player in the fashion industry, aiming to provide quality clothing. Reviews of other clothing brands ...

Live Fast Die Young Clothing Brand

Live Fast Die Young (LFDY) is an international streetwear brand known for its authentic and trendy collections, including hoodies, tees, ...

Buy Eightyfive Clothing Online In Germany

Eightyfive Clothing is a streetwear brand known for its contemporary collections, offering a range of stylish streetwear items like ruffled ...

Broken Planet Clothing UK

Broken Planet Clothing is a London-based clothing brand known for its diverse range of apparel. From hoodies and sweatpants to ...

Where To Buy Bearbricks in France?

Bearbricks stands tall as an icon, seamlessly blending fashion with the allure of collectible toys. Created by the Japanese company ...

Buy 99Based Clothing Online

The 99Based clothing brand features unique collections like the Broken 99 Knit Sweater and Based Outline Jeans. Its online store offers ...

Essentials Clothing: Must-Have Essentials for Every Style

Essentials clothing refers to versatile, timeless pieces that form the foundation of a wardrobe, transcending fashion trends. These include: Origin ...

Cocokick Shoes: Stepping into Comfort

Cocokick Shoes brings a fusion of comfort, style, and sustainability to footwear. These shoes are crafted with innovation in mind, ...

Hellstar Clothing: A Stylish Revolution

Hellstar Clothing is a trendy streetwear label founded by American actor Sean Holland. Known for its urban style, the brand ...

Barriers Clothing: Best US Streetwear Brand

Barriers Clothing is a streetwear brand founded by Kwesi Ndu, focusing on educational and stylish apparel. The brand intertwines fashion ...

Minus Two Clothing: Never Go Out Of Style

Minus Two Clothing is a brand that embraces individuality and diversity, catering to those who feel outside the societal norms. ...

Carsicko Clothing: Next Best Streetwear Brand?

Carsicko is a prominent streetwear brand recognized for its striking logo prints and elegant designs. The brand has redefined street ...

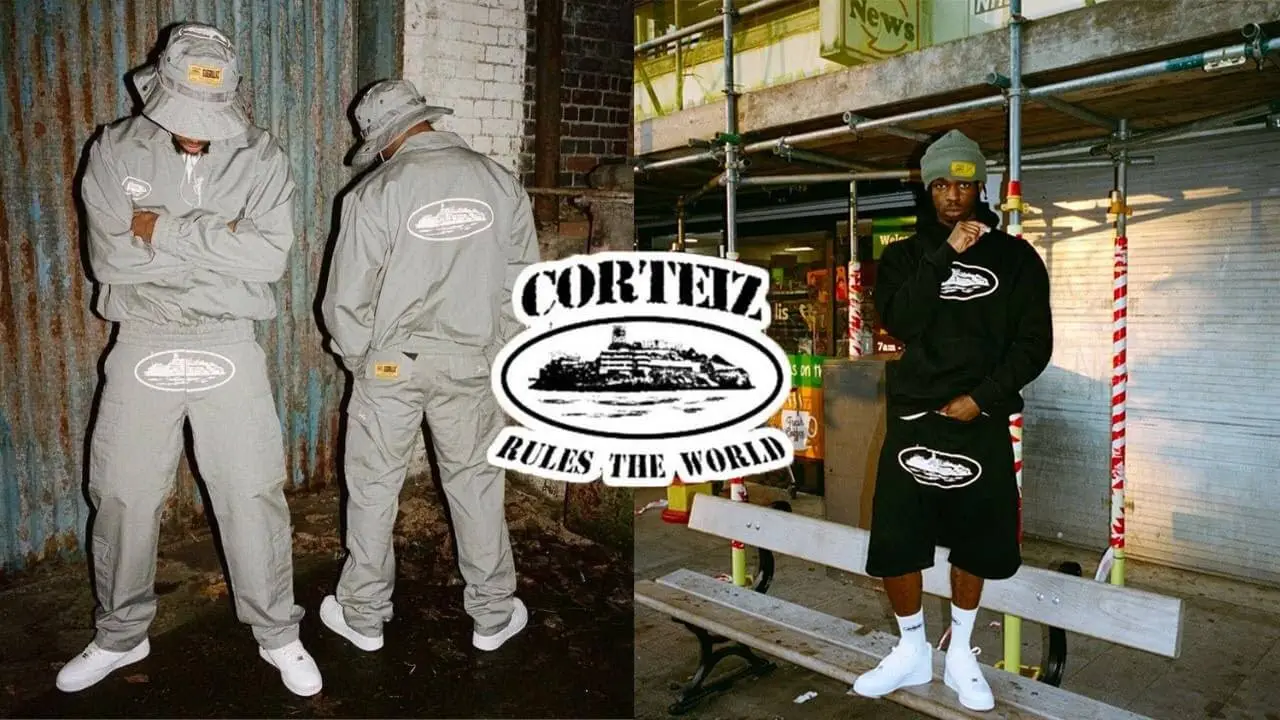

Corteiz Clothing: Fascinating Ready to Wear Collection

Corteiz Clothing offers high-end fashion for both men and women, including T-shirts, sweaters, pants, shorts, jackets, and accessories. The brand ...

8721g-G Mega Gloss 1 Part Marine Topside Polyurethane Enamel

8721g-G Mega Gloss 1 Part Marine Topside Polyurethane Enamel is a single-component polyurethane enamel specifically designed for marine use. It ...

Mughal Delhi Una Visita A Pie En Un Pequeño Grupo De Timeless Tale

The meaning of this term in Spanish, Mughal Delhi Una Visita A Pie En Un Pequeño Grupo De Timeless Tale, ...

Daily Game: Video Game News and Reviews, Sports, Blu-Ray, and Electronics

Daily Game is an online platform that provides news and reviews on video games, sports, Blu-Ray, and electronics. The platform ...

Dad Blog UK: Gestation And Lactation, The Only Two Things Men Can’t Do As Parents

Gestation and lactation are two aspects of parenting that are unique to women. Let’s break them down. Gestation (Pregnancy): Gestation ...

Star Ocean Mall App: Is It Real Or Fake?

The Star Ocean Mall app is fraudulent. It is not a legitimate app and is not recommended for use ...

Goth IHOP Ero Honey Health Benefits & Weight Loss Diet

Goth IHOP Ero Honey is a dark, exquisite variety of honey characterized by its rich, ...

Eedr River: History, Ecosystem, and Ecological Importance

The Eedr River is a beautiful waterway flowing through Germany. It winds its way through the picturesque landscapes of Hesse, ...