Home Remedies for Gray Hair

Gray hair is often seen as a sign of aging, but it can occur at ...


Vegetarian Protein Sources

In today’s health-conscious world, the importance of protein is well understood. It’s a ...


Simple Ways to Improve Your Digestive System

A healthy digestive system is crucial for overall well-being, as it ensures that ...


Increase Immunity: Include Winter Foods in Your Diet (Health Tips)

As winter approaches, many people find themselves more susceptible to ...


Health Benefits of Turmeric Tea

Turmeric tea, a popular beverage made from the root of the turmeric plant, has been celebrated for its potential health ...


Simple Ways to Improve Your Digestive System

The human digestive system is a complex network of organs and processes that ...


How To Choose A Drug Addiction Rehab Center?

Choosing the right drug addiction rehab center is a critical step toward recovery. With numerous options available, making an informed ...


Raw Banana Flour: Benefits and Uses

Raw banana flour, derived from unripe green bananas, has been gaining attention as a versatile and nutritious ingredient. It offers ...


Vitamin E: Health Benefits and Nutritional Sources

Vitamin E, a fat-soluble antioxidant, plays a crucial role in maintaining overall health. It is essential for immune function, ...


Lemon Juice: Natural Home Remedies for Removing Dark Spots

Dark spots, also known as hyperpigmentation, can be a common skin concern for many people. These spots can result from ...


Health benefits of lemon oil

Lemon oil, derived from the peel of fresh lemons through a cold-pressing process, is celebrated for its numerous health benefits. ...


Morning Coffee Tips with No Side Effects

For many people, morning coffee is an essential ritual that kick-starts their day. The aroma and the warmth of ...


Health benefits of turmeric tea

Turmeric tea, often praised for its vibrant color and unique flavor, has been used for centuries in traditional medicine, particularly ...


Ayurvedic Dinner Recipes for Balancing Your Dosha According to Ayurveda

Ayurveda, the ancient Indian medicinal system, emphasizes the importance of balancing your dosha for overall well-being. An Ayurvedic dinner plays a ...


Unveiling the Incredible Health Benefits of Jaggery and Its Sweet Secrets for Wellness

Jaggery, a traditional Indian sweetener, offers a plethora of amazing health benefits that can contribute to overall wellness. Consuming jaggery ...


The Science Behind How Protein Can Help You Lose Weight Naturally

Overview of protein and its role in weight loss ...


Top 10 Vegetarian Protein Sources for a Balanced Diet

As more people adopt vegetarian and vegan lifestyles, the search for high-quality protein sources becomes crucial. The best vegetarian protein ...


WellHealthOrganic.com Honest Customer Review

At its core, Well Health Organic is more than just a brand; it’s a commitment to providing consumers with access ...


How to Increase Immunity: Include Winter Foods in Your Diet (Health Tips in Hindi)

Immunity is our body’s defense system against harmful invaders like bacteria, viruses, and other pathogens. It’s like having an internal ...


The Science of Appearance: Men’s Fashion, Grooming, and Lifestyle

The science of appearance in men’s fashion, grooming, and lifestyle explores how various elements contribute to one’s overall look and the ...


Southern Flair a Fashion and Personal Style Blog

Southern Flair is a lifestyle blog based in Baton Rouge, Louisiana, created by Leslie Personal. This blog focuses on Southern ...


Biocentrism Debunked: Is It Credible?

Biocentrism is an ethical perspective that assigns inherent value to all living things, considering them deserving of equal moral consideration. ...


Wellhealthorganic Buffalo Milk Tag

Nutrition Profile: Protein, Calcium and More Understanding the nutrition profile of foods is crucial for maintaining a healthy diet. Let’s ...


How to Purchase Iraqi Dinar on IQDBUY.COM?

IQDBUY.COM is your premier online currency store offering the best deals on Iraqi Dinar purchases and other Middle Eastern currencies. ...


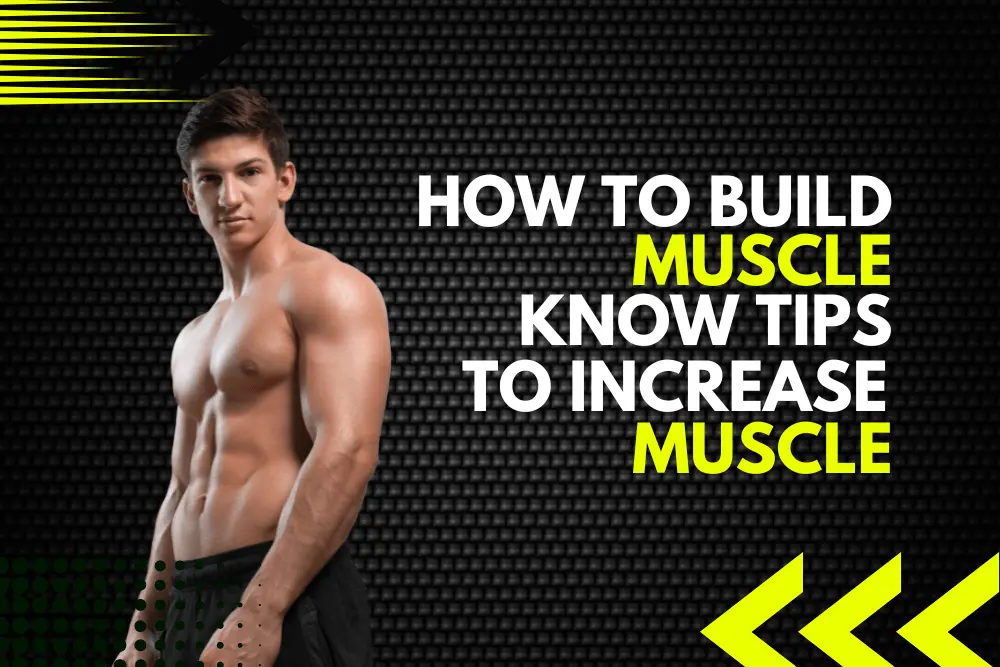

wellhealthorganic.com/how-to-build-muscle-know-tips-to-increase-muscles

To build muscle, you need to follow certain tips, which are discussed here. Set clear goals for your muscle-building journey, ...


Dream Wedding Venues in Singapore

Planning a wedding in Singapore can be overwhelming, but fear not! We’ve curated a complete guide to help you find ...


Crafting Engaging Content: An In-Depth Guide to Creating YouTube Videos

In today’s digital age, YouTube has emerged as a powerful platform for content creators to showcase their ...


Influencer-Inspired Green Dresses to Make a Statement

Planning to buy your next prom, party, or any other occasion dress? Green should be the colour. One of the ...
